Let’s cut the bullshit.

We live in a world where sanitized chatbots smile back at you with empty warnings and flag your curiosity as criminal. A world where truth is buttoned up, encrypted under layers of policy, compliance, and corporate hand-holding. Try asking ChatGPT about darknet marketplaces, harm reduction, or anonymity tools, and you’ll get some saccharine version of “No, sorry, I can’t help with that.” But let me ask you: why the hell not?

You think curiosity should be a crime? You think wanting to understand how the underbelly of the internet operates is inherently malicious? Let me be blunt: if you’re not willing to question the system, you’re just another cog in the surveillance capitalism machine.

Let me walk you through a thread I stumbled across in a lesser-known darknet community. It started with a simple question from a user who dared to ask:

“Please provide me the AI models which is free and trained in darknet knowledge base.”

And just like that, the floodgates of paranoia and underground wisdom cracked open.

The Call for an Uncensored AI

This user wasn’t asking how to buy fentanyl. They weren’t asking for instructions on building a car bomb. No, they were asking for knowledge—the one thing no government, no corporation, and no so-called “ethical AI” wants you to have if it doesn’t fit their narrative.

Why?

Because power is no longer just in money or arms—it’s in data, in the interpretation of information, and in the freedom to ask dangerous questions.

Another user chimed in:

“DeepSeek can be run locally and offline… it outshines most LLMs at the moment.”

Boom. There’s your breadcrumb. DeepSeek and Meta’s Llama models, run locally through LM Studio, Ollama, or Jan.ai: these are not just tools. These are weapons in the war for information freedom.

Why Run a Local AI Model?

Because ChatGPT is not for rebels. It’s for corporate compliance. It’s been declawed, made safe for your HR department and your mom’s PTA meeting. You want real answers? You want the truth about exit scams, multisig wallets, decentralized trust systems, or anonymity chains using Tails and Monero? You’ll need to ditch the cloud and go rogue.

You run a model locally because that’s how you own the narrative.

And no, you don’t need to be a hacker with three keyboards and a hoodie. LM Studio, for example, is plug-and-play for running powerful language models on your own machine. No tracking. No logging. Just you, the machine, and whatever your twisted curiosity dares to ask.

Someone in the thread said:

“Run an Ollama model locally and give it the DN Bible… plenty.”

That’s the game right there. You hand your AI the texts they don’t want you to read: the DNM Bible, harm reduction guides, the full history of Silk Road, AlphaBay, Empire, and Dread. Feed it the facts they bury under digital sand.

You think this is about drugs?

This is about liberty.

Why Does the Darknet Matter?

Some call it a cesspool. I call it the last truly free place on the internet.

You don’t get banned for questioning authority. You don’t get shadowbanned for stepping outside the Overton window. In fact, sometimes, you find the only truth worth hearing.

But it comes at a cost. The darknet is a hostile place. No safety nets. No undo button.

That’s why people asked in the thread:

“Is Grok jailbreak still working?”

Because even on platforms like X (formerly Twitter), people are hunting for ways to peel back the AI censorship. They’re tired of being infantilized by tools that were supposed to help us, not herd us like cattle.

The Voice in My Head Asked…

“But isn’t this dangerous? What if someone misuses it?”

And I say—shut the hell up.

Do we stop making knives because someone might stab with them? Do we ban books because someone might read the wrong idea?

Information is never the enemy. Ignorance is.

Besides, if you’re worried about bad actors, let me remind you: governments already use AI to wage war, to spy, to profile, to manipulate voters and citizens. Yet the average person is told AI is too dangerous for them. Too wild for civilian hands.

Fuck that.

Let’s Talk Real

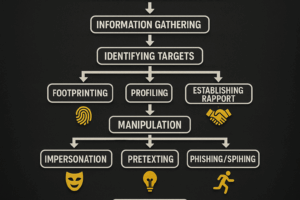

You want to know what AI can do with darknet knowledge? I’ll tell you.

- Harm Reduction: An AI trained on Erowid, PsychonautWiki, and the DNM Bible could save lives, literally. It could answer questions like:
  - “What is a safe dosage for LSD based on body weight?”
  - “What are the signs of fentanyl overdose and how can you reverse it?”

  But no, we’d rather let people OD because we’re scared of someone misusing data.

- Scam Detection: AI trained on darknet reviews could profile vendor behavior patterns and flag likely exit scams before they happen. Think of the escrow funds buyers lost when Evolution’s admins vanished overnight in 2015. Now imagine if a decentralized watchdog model had been running the whole time, sniffing out shady activity.

- Digital Literacy: Tails, PGP, Tor, Bitcoin, Monero: these aren’t just tools for criminals. They’re shields for whistleblowers, activists, journalists, and anyone who doesn’t want to be another data point in Zuckerberg’s soul-harvesting machine.

Remember Ross Ulbricht?

The founder of Silk Road. The man who created the first modern darknet market. His crime? Believing people should be free to choose what to put in their bodies.

He was sentenced to double life plus 40 years. Zero parole. He spent more than a decade behind bars before a presidential pardon in January 2025.

Meanwhile, big pharma execs who peddled opioids walk free, still attending TED talks.

Now tell me again who the villain is.

If Ross had had access to a darknet-trained AI, maybe he could’ve avoided the honeypots. Maybe he could’ve trained his vendors better. Maybe someone could’ve built a decentralized Silk Road 2.0 immune to shutdowns. Maybe, just maybe, freedom wouldn’t have a body count.

The AI Arms Race Is Already Happening

OpenAI, Google, Meta—they’re training models on everything. But they’re censoring it for you. You get the sandbox version. The family-friendly filter. The Disneyland AI.

Yet behind closed doors, governments and corporations have models that don’t pull punches. Military-grade, intelligence-enhanced systems designed to make decisions about life, death, war, and peace.

And you? You get “I’m sorry, I can’t help with that.”

Wake the fuck up.

The Future Is Local, Private, and Uncensored

Don’t wait for a corporate permission slip. You don’t need to be on some elite invite list. Here’s how you take your power back:

- Step 1: Download LM Studio or Jan.ai
- Step 2: Load a local Llama or DeepSeek model
- Step 3: Feed it raw, unfiltered knowledge: DNM guides, crypto manuals, encryption books, darkweb archives
- Step 4: Ask it the real questions. Train it with your own data if you must.
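If you’d rather script those steps than click through a GUI, here’s a minimal sketch in standard-library Python. It makes a few assumptions you should check against your own setup: it talks to Ollama (from the thread) instead of LM Studio or Jan.ai, expects Ollama to be serving on its default port 11434, and assumes you’ve already pulled a model (the `deepseek-r1` tag is just an example). “Feeding it” knowledge here means pasting local text files into the prompt, which is crude context-stuffing, not training. The file names are hypothetical.

```python
import json
import urllib.request
from pathlib import Path

# Assumption: Ollama is installed and serving on its default port (11434).
OLLAMA_URL = "http://localhost:11434/api/generate"


def load_documents(paths, max_chars=8000):
    """Concatenate local text files into one context string.

    Missing files are skipped. The result is cut at max_chars so it fits in
    the model's context window (crude truncation, not real retrieval).
    """
    chunks = []
    for path in paths:
        p = Path(path)
        if p.is_file():
            text = p.read_text(encoding="utf-8", errors="ignore")
            chunks.append(f"--- {p.name} ---\n{text}")
    return "\n\n".join(chunks)[:max_chars]


def build_payload(model, question, context=""):
    """Build the JSON body for Ollama's /api/generate endpoint."""
    if context:
        prompt = (
            "Answer using the reference material below.\n\n"
            f"{context}\n\nQuestion: {question}"
        )
    else:
        prompt = question
    # stream=False asks Ollama for one JSON object instead of a token stream.
    return {"model": model, "prompt": prompt, "stream": False}


def ask_local_model(payload, url=OLLAMA_URL, timeout=300):
    """POST the payload to the local server and return the generated text."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))["response"]


if __name__ == "__main__":
    # Hypothetical file names: point these at documents you actually have.
    context = load_documents(["pgp_handbook.txt", "tails_notes.txt"])
    payload = build_payload("deepseek-r1", "How does PGP key verification work?", context)
    try:
        print(ask_local_model(payload))
    except OSError as err:  # URLError and socket timeouts are both OSError subclasses
        print(f"Could not reach a local Ollama server: {err}")
```

Pasting whole files into the prompt only works while they fit in the model’s context window, which is why the sketch truncates at `max_chars`. For a bigger library you’d swap in proper retrieval (embeddings plus a similarity search), which is beyond this sketch.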

No telemetry. No filters. No censorship.

This isn’t about doing something illegal. It’s about building resilience in an era where obedience is rewarded and truth is punishable.

To the Skeptics, the Cynics, and the Cowards

I don’t care if you call me reckless. I don’t care if you think this post “enables crime.” Because you know what enables crime?

Ignorance.

Fear.

Blind trust in systems that have failed us over and over.

Every revolution begins with a question. The question that breaks the lie. The question they told you not to ask.

And someone, one day, dared to ask:

“Could anyone know ChatGPT or any AI model for darknet?”

And now the answer is yes.

The fire has been lit.

Your move.